- Posted on: 2023-02-14

Customers often ask us: “Should we be recording ‘X’ and ‘Y’ with our test system?” This blog post describes the rationale for our position, which is stated in the conclusion. I’m sure you’ll be able to guess the answer as you read about the various factors at stake here.

In most test systems, engineers design the custom control that will record the data needed for the actual test measurement during a test. This can amount to anywhere from a few kilobytes to many megabytes per test. Technically, this meets the requirement for the system, but it doesn’t help deliver information when tests fail. Was it because the operator applied too much torque during setup? Was the power supply current limit too low? Was the DUT retested for the 8th time without time to cool down? These are just a few of the easiest questions to think of, and they could easily be answered if more data had been recorded. When we deal with embedded products with dozens of I/Os and hundreds of registers, the questions quickly become more complicated.

You can never go back in time and record data after the test is done, so it’s best to build data logging into your application as early as possible. Our engineers at Synovus never know what questions our clients will ask months into a project, but we know there will be some. Those generally sound like: “That signal seems to be rising slower than expected. When did this start?” or “Those 2 signals are not firing in the expected order. Has it always been this way?” If the datasets are already on disk, it is very easy to pull one file per month and show engineers the 5 signals of interest through a few test sequences.

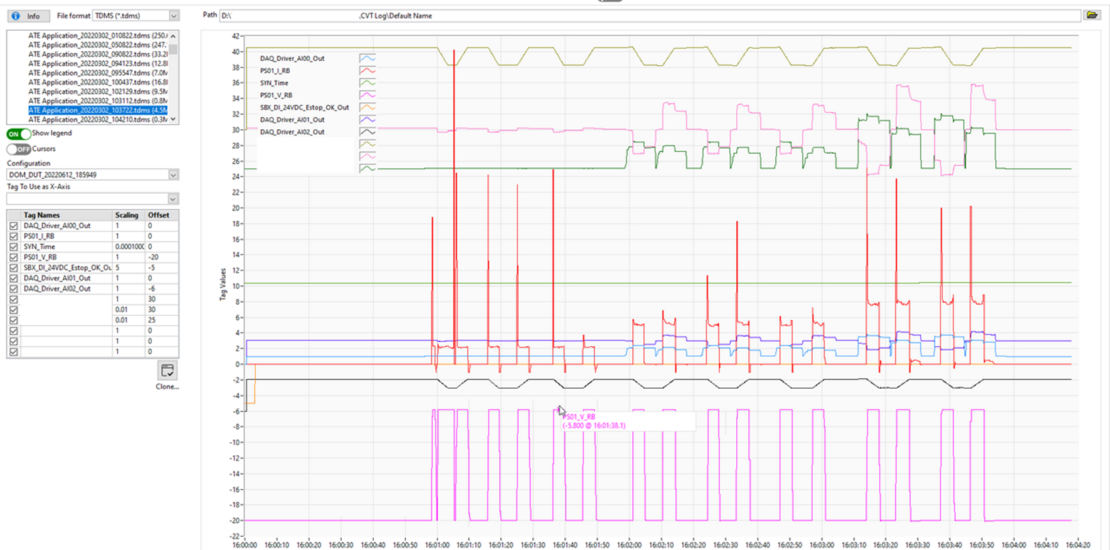

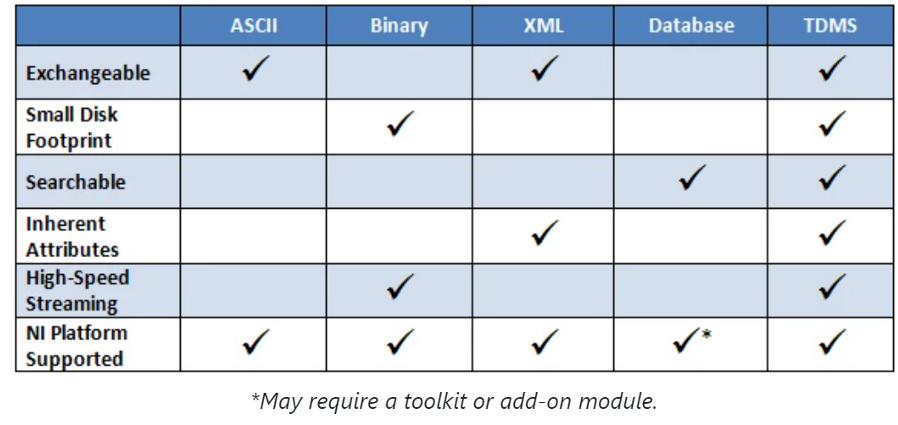

In our typical ATE solutions powered by Symplify, the software continuously streams the value of every Tag to disk multiple times per second. This includes all the typical analog and digital values, but it also includes CPU percentage, RAM usage, user interactions (yes, we even record the position of the mouse…) and many other parameters that can help identify issues tomorrow or in 16 months. In 95% of projects, we have used this data to help customers find out when a specific problem occurred, how a measurement evolved over time, or whether a sensor reading was related to ambient temperature. The best way to store data to disk is to use the NI TDMS file format, which is optimized for easy access; the data can later be tagged with metadata and processed by Excel, LabVIEW, MATLAB and many other tools.
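To make the idea of continuous streaming concrete, here is a minimal Python sketch. It is not how Symplify is implemented: it writes plain CSV rather than TDMS so that it runs with the standard library only, and the function name `stream_tags_to_csv` and the tag names are hypothetical.

```python
import csv
import tempfile
import time
from pathlib import Path
from typing import Callable, Dict


def stream_tags_to_csv(
    path: Path,
    tags: Dict[str, Callable[[], float]],
    samples: int,
    period_s: float = 0.2,
) -> int:
    """Append one timestamped row per sample, holding the value of every tag."""
    names = sorted(tags)  # fixed column order
    # Write the header only when starting a new (or empty) log file.
    needs_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="") as f:
        writer = csv.writer(f)
        if needs_header:
            writer.writerow(["timestamp"] + names)
        for _ in range(samples):
            writer.writerow([time.time()] + [tags[name]() for name in names])
            f.flush()  # hand each row to the OS so a crash loses little data
            time.sleep(period_s)
    return samples


if __name__ == "__main__":
    # Hypothetical tags; a real system would read hardware or OS counters here.
    demo_tags = {
        "supply_current_A": lambda: 0.42,
        "ambient_temp_C": lambda: 23.5,
    }
    log_path = Path(tempfile.gettempdir()) / "tag_log_demo.csv"
    stream_tags_to_csv(log_path, demo_tags, samples=3, period_s=0.2)
    print(f"Wrote 3 samples to {log_path}")
```

The point of the sketch is that every tag is recorded on every cycle, whether or not the current test needs it. A production logger would more likely use a binary format such as TDMS and run in its own thread or process.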

With only those few examples, Synovus’s belief is unambiguous: there cannot be too much data stored on disk for a given test system. Whether 4TB of disk space gives you 3 months or 36 months of log data (typical), the moment you need to answer a question requiring historical data, this $100 disk drive will become one of your most valuable assets. In some cases, our clients estimated that this information saved their entire engineering teams months of development… so $100 is a lot less than the estimated $342k… We are not disputing that 99.9% of that data will likely never be used; rather, we are arguing that the remaining 0.1% is well worth the small investment!
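How long a 4TB drive lasts depends mostly on how many tags you log and how often. The back-of-the-envelope sketch below shows that arithmetic. The tag counts, the 10 Hz rate, the 8 bytes per sample and the 30-day month are illustrative assumptions, not Symplify figures, and the estimate ignores file-format overhead and assumes logging around the clock.

```python
def months_of_logging(
    disk_bytes: float,
    n_tags: int,
    rate_hz: float,
    bytes_per_sample: int = 8,   # assumption: one 64-bit value per sample
    days_per_month: int = 30,    # assumption: 30-day months, logging 24/7
) -> float:
    """Estimate how many months of raw tag data fit on a disk."""
    bytes_per_day = n_tags * rate_hz * bytes_per_sample * 86_400
    return disk_bytes / (bytes_per_day * days_per_month)


if __name__ == "__main__":
    four_tb = 4e12  # 4 TB in bytes (decimal)
    for n_tags in (500, 5000):  # hypothetical system sizes
        months = months_of_logging(four_tb, n_tags, rate_hz=10)
        print(f"{n_tags} tags at 10 Hz: about {months:.1f} months")
```

Under these assumptions, 500 tags fill the drive in roughly 38.6 months and 5000 tags in roughly 3.9 months, which is consistent with the 3-to-36-month range quoted above.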

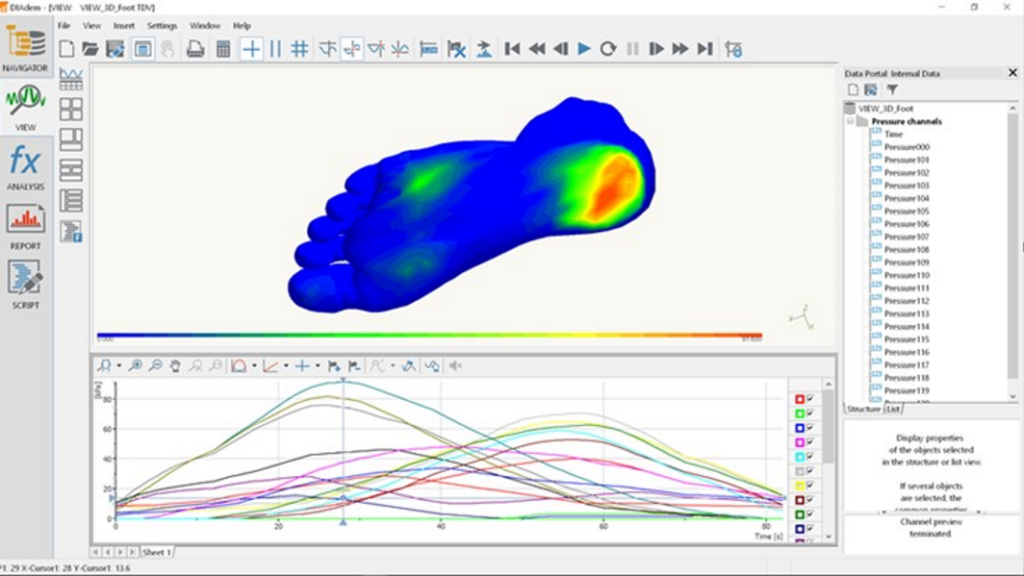

We will present more on this topic in the upcoming months as we release new features in the Symplify™ TDMS viewer and publish our new tool to analyze those terabytes of data automatically for you, finding correlations between signals during your standard test procedures. In the meantime, ask your engineers to start logging more data, and if you already have NI Test Workflow, give DIAdem a try with your data files. It can handle CSV and other formats much faster than Excel.

Learn more about Symplify and try Symplify Now! for yourself. TDMS viewer is included!